Computers Can Now Recognize 21 Different Facial Expressions; Helps Track Origins Of Emotions

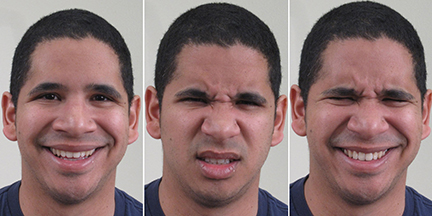

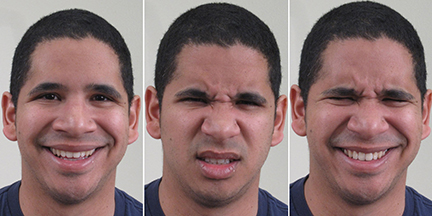

Ohio State University's research team has made it possible for computers to recognize 21 distinct, complex and even contradictory facial expressions. So the next time you feel sad and angry at the same time, or disgusted and yet happy, and your face shows an expression that people around you find hard to read, a computer may be able to help. Taking cognitive analysis research a step further, the OSU researchers have more than tripled the number of facial expressions documented to date. They grouped the emotions that human faces show into 21 categories, based on how consistently people move their facial muscles to express each one, and fed that data to computers so that they could recognize the expressions accurately.

Until now, cognitive analysis researchers have worked with only six basic emotions: happy, sad, fearful, angry, surprised and disgusted. But we now know that there is more to expressions than those broad categories. The researchers therefore came up with a computational model that can map emotion in the brain with greater precision than ever before. Tracking these expressions all the way back to the origin of emotions in the brain could help solve a number of problems. For instance, the model could eventually help in the diagnosis of autism or post-traumatic stress disorder.

To carry out the research, the team photographed 230 volunteers (130 female and 100 male) while they reacted to different verbal cues. For example, "You just got some good news unexpectedly" would produce a 'happily surprised' expression. Likewise, watching a gross movie that is also very funny would produce a 'happily disgusted' expression, and someone close to you behaving badly would produce a 'sadly angry' one. The researchers termed these combinations "compound emotions". By mapping this data to the movement of facial muscles, they were able to make computers recognize all 21 expressions with great precision.

The team working on this includes Aleix Martinez, a cognitive scientist and associate professor of electrical and computer engineering, and doctoral students Shichuan Du and Yong Tao.

Using the resulting 5,000 images, the researchers identified prominent landmarks for facial muscles, such as the outer edge of the eyebrow or the corners of the mouth. Psychologist Paul Ekman's Facial Action Coding System (FACS) was the standard tool they used to analyze these facial movements. In the near future, cognitive scientists may link the facial expressions with emotions and gather data about genes and neural pathways to determine the origin of emotions in the human brain. As mentioned earlier, the model also has potential applications in medical diagnosis.
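To make the idea of "compound emotions" described through facial muscle movements a little more concrete, here is a minimal Python sketch. It is not the OSU team's model. It assumes each expression can be summarized as a set of FACS action unit (AU) numbers, builds a compound template as the union of two basic templates, and picks the closest template by Jaccard overlap. The AU assignments in `BASIC_AUS` and the names `build_templates`, `jaccard` and `classify` are all illustrative assumptions, not data or methods from the study.

```python
from typing import Dict, FrozenSet, Tuple

# Illustrative AU sets for the six basic emotions. The AU numbers are real
# FACS codes (e.g. 12 = lip corner puller), but which AUs are assigned to
# which emotion here is an assumption for this sketch, not study data.
BASIC_AUS: Dict[str, FrozenSet[int]] = {
    "happy": frozenset({6, 12, 25}),
    "sad": frozenset({1, 4, 15}),
    "fearful": frozenset({1, 4, 20, 25}),
    "angry": frozenset({4, 7, 24}),
    "surprised": frozenset({1, 2, 25, 26}),
    "disgusted": frozenset({9, 10, 17}),
}

# The three compound labels named in the article, each paired with the two
# basic emotions it combines.
COMPOUNDS: Dict[str, Tuple[str, str]] = {
    "happily surprised": ("happy", "surprised"),
    "happily disgusted": ("happy", "disgusted"),
    "sadly angry": ("sad", "angry"),
}


def build_templates() -> Dict[str, FrozenSet[int]]:
    """Return AU templates for basic emotions plus the compound ones."""
    templates = dict(BASIC_AUS)
    for name, (first, second) in COMPOUNDS.items():
        # Assumption: a compound expression shows the AUs of both parts.
        templates[name] = BASIC_AUS[first] | BASIC_AUS[second]
    return templates


def jaccard(a: FrozenSet[int], b: FrozenSet[int]) -> float:
    """Overlap between two AU sets: |intersection| / |union|."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify(observed: FrozenSet[int], templates: Dict[str, FrozenSet[int]]) -> str:
    """Pick the template label whose AU set best overlaps the observed AUs."""
    return max(templates, key=lambda name: jaccard(observed, templates[name]))


if __name__ == "__main__":
    templates = build_templates()
    observed = frozenset({1, 2, 6, 12, 25, 26})  # hypothetical detected AUs
    print(classify(observed, templates))
```

A real system would first have to detect the action units from the facial landmarks in each photograph, which is the hard part and is not shown here. The set-union rule is only one simple way to model a compound expression.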

What are your thoughts on the applications of this technology in robotics? Share them with us in the comments below.

Source: #-Link-Snipped-#
