Smartphones with Eye Gesture Control Could Be Possible, Researchers Suggest

A team of researchers from MIT, the University of Georgia and the Max Planck Institute for Informatics is working to make eye gesture control feasible on mobile devices. They are crowdsourcing data on where users focus their eyes on the screens of smart devices. The data is collected through applications running on numerous devices. The aim is to teach software to coordinate actions with users' eye movements. If the effort succeeds, mobile devices with eye gesture control will no longer be out of reach.

The team has trained the software to track where the user is looking on the device's screen with an accuracy of 1 cm on mobile phones and 1.7 cm on tablets. However, this accuracy needs to improve before the technique can be applied in mobile devices. Aditya Khosla, a graduate of MIT and one of the contributors, recently presented the work at CVPR 2016. The team received many useful suggestions and much feedback, and is moving ahead to make the technology achievable.

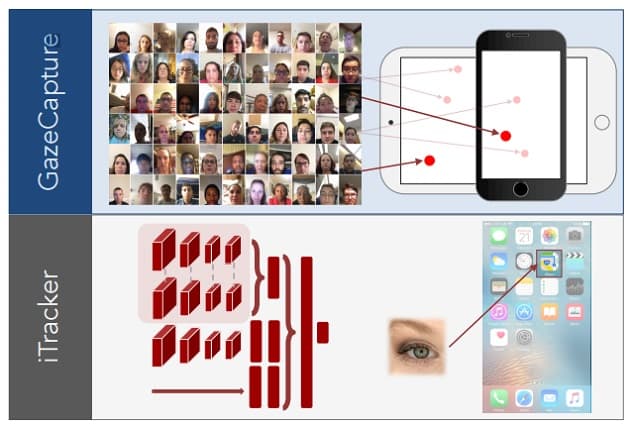

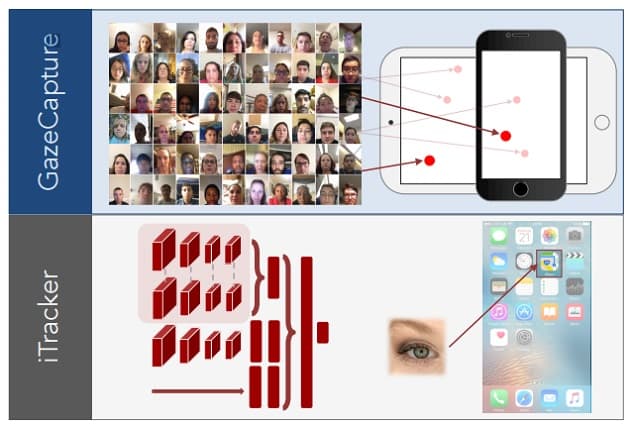

They first used an iPhone app called GazeCapture to collect data on where users were looking and correlated it with the positions of dots that appeared on the screen. The collected information was then used to train software named iTracker. iTracker works from captured images of the user's face, using cues such as the position and direction of the eyes and head. The results were quite promising and improved as more data was evaluated.

Overview of GazeCapture and iTracker: the former is used to collect data, while the latter is the trained software
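An accuracy figure given in centimetres, like the ones quoted earlier, can be read as the average distance between where the software estimates the user is looking and where the on-screen dot actually was. Here is a minimal Python sketch of that calculation. The function name and the sample coordinates are hypothetical, chosen only for illustration, and this is not the researchers' own code.

```python
import math


def mean_gaze_error_cm(predicted, actual):
    """Return the mean Euclidean distance, in cm, between two point lists.

    predicted: estimated gaze points as (x, y) pairs in cm
    actual:    true dot positions as (x, y) pairs in cm
    """
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    total = 0.0
    for (px, py), (ax, ay) in zip(predicted, actual):
        # Straight-line distance between the estimate and the dot.
        total += math.hypot(px - ax, py - ay)
    return total / len(predicted)


# Hypothetical sample values, not data from the study.
dots = [(1.0, 2.0), (4.0, 6.0), (3.0, 3.0)]
estimates = [(1.0, 3.0), (4.0, 6.0), (6.0, 7.0)]
print(mean_gaze_error_cm(estimates, dots))  # prints 2.0
```

A smaller number means the estimates sit closer to the dots, which is the sense in which more training data is expected to improve accuracy.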

To improve the accuracy, more data will be needed for analysis and for training the software. At present, the GazeCapture app has a total of 1,500 users. The researchers believe that data from at least 10,000 users will be required to reach the accuracy needed to implement the technology. With the present accuracy and the necessary hardware requirements, the technology is not yet feasible to pair with touch devices, so the team is working to improve the accuracy further.

Eye-operated devices could be really helpful in fields like medical diagnosis. They might help with conditions such as schizophrenia, concussions and other disorders involving eye-tracking dysfunction. Because of the technology's wide scope and range of applications, efforts to reach sufficient accuracy are continuing. With time, this should become achievable and lead to the development of new smart devices with eye gesture control.

Source - #-Link-Snipped-# | #-Link-Snipped-#
