'Trial And Error' Is How UC Berkeley Robot BRETT Learns Motor Skills

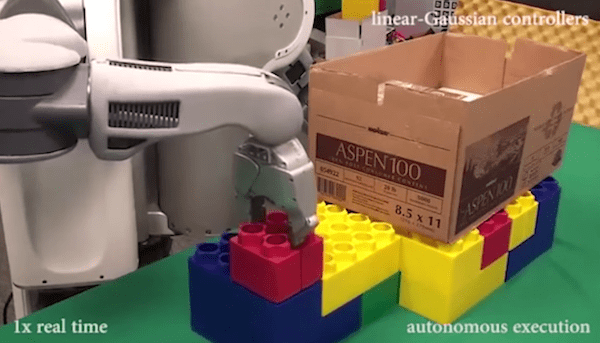

You must have seen a kid playing with Lego blocks and learning to put them together on their own by the good ol' 'trial and error' method. Well, that's how a UC Berkeley robot learns various motor skills. A team of researchers from the University of California, Berkeley has developed algorithms that enable robots to learn on their own. Described as a major step forward in the field of AI, this reinforcement learning technique helps a robot complete different tasks without pre-programmed instructions on how or where to do them. The robot, BRETT, is a PR2 (Willow Garage Personal Robot 2) that uses 'deep learning' algorithms to master tasks such as fitting Lego blocks together, placing a clothes hanger on a rack, screwing a cap onto a bottle, assembling a toy plane and much more.

The electrical and computer engineers working on this project developed software that gives the robot the ability to learn without external help in an unpredictable environment. If a robot can adapt to its surroundings while it learns new things, it can be placed in real-life settings to do real jobs. The UC Berkeley engineers make this possible with 'deep learning', which draws inspiration from the neural circuitry of the human brain. This is a radical departure from the conventional method of feeding the robot a large number of simulated situations and telling it how to find its way through each one.

Just as humans learn from experience, BRETT relies on deep learning programs in the form of 'neural nets', in which layers of artificial neurons process raw sensory data such as images and sounds. When presented with a task requiring motor skills, the robot's algorithm uses a 'reward function' that scores how well BRETT is doing the task. From this score, the robot works out which movements are better suited to the job at hand and keeps adjusting its approach until the score is high enough to complete the task.
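To make the reward-driven loop a little more concrete, here is a toy sketch in Python. It is not BRETT's actual method, which uses neural networks. It is plain random-search hill climbing over a single number. The task (moving an "arm" to a target position), the `reward` function, the `TARGET` value and the stopping threshold are all hypothetical, chosen only to show the idea: try a slightly different movement, keep it if it scores better, and stop once the score is good enough.

```python
import random

# Hypothetical toy task: the "arm" must end up at a target position on a line.
TARGET = 0.75


def reward(action: float) -> float:
    # Hypothetical reward function: 0 is a perfect score, and the farther
    # the arm ends up from the target, the more negative the score.
    return -abs(action - TARGET)


def learn_by_trial_and_error(threshold: float = -0.01,
                             max_trials: int = 10_000,
                             seed: int = 0) -> tuple[float, int]:
    """Random-search hill climbing: a stand-in for trial-and-error learning."""
    rng = random.Random(seed)
    best_action = 0.0                  # initial guess at the right movement
    best_score = reward(best_action)
    trials = 0
    # Keep trying until the score is "high enough" (or we give up).
    while best_score < threshold and trials < max_trials:
        trials += 1
        # Try a slightly different movement than the best one so far.
        candidate = best_action + rng.gauss(0.0, 0.1)
        score = reward(candidate)
        # Keep the new movement only if it scores better.
        if score > best_score:
            best_action, best_score = candidate, score
    return best_action, trials


if __name__ == "__main__":
    action, trials = learn_by_trial_and_error()
    print(f"learned action {action:.3f} after {trials} trials")
```

Calling `learn_by_trial_and_error()` returns a movement within 0.01 of the target along with the number of attempts it took. A real robot faces the same loop, but its "movement" is a whole neural network's worth of parameters rather than one number.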

Take a look at the video to see BRETT in action:

The team is hopeful that over the next decade we will see significant advances in robots' capabilities. That's a frontier that scientists and roboticists around the world hope to push forward.

What are your thoughts about robots learning on their own? Share them with us in the comments below.

Source: #-Link-Snipped-#
