Paperfold Is A Shape-Changing Smartphone Prototype Developed At Queen's University

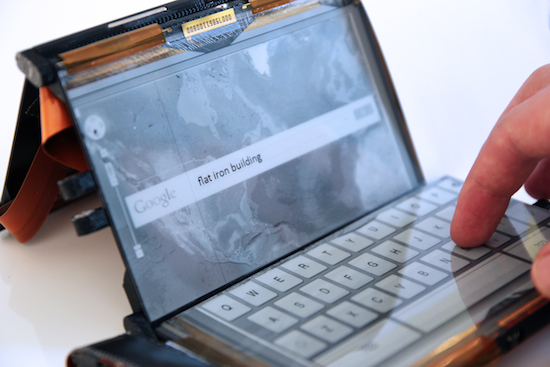

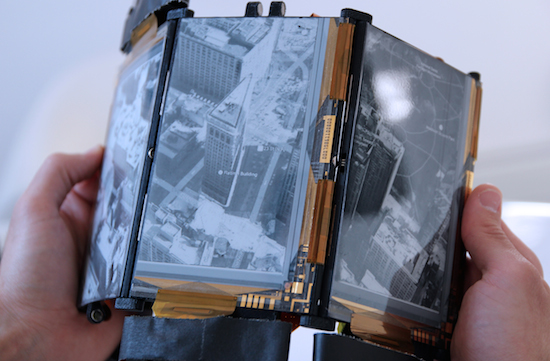

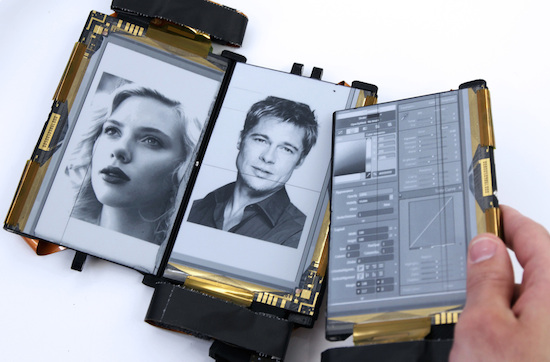

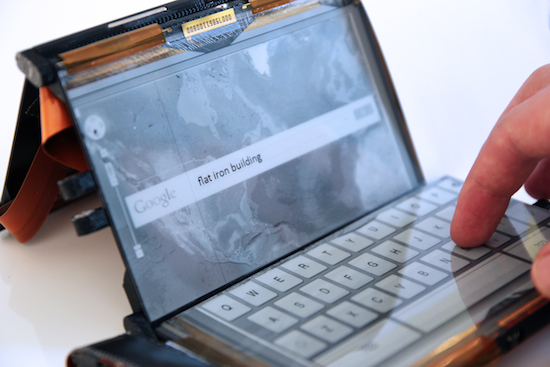

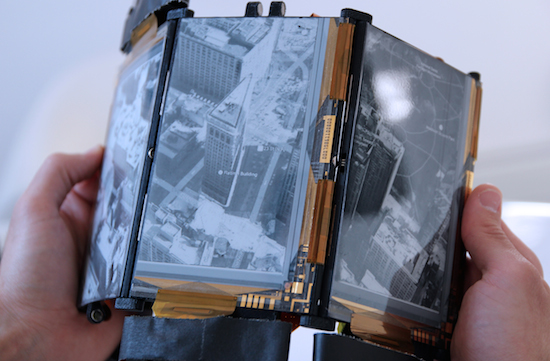

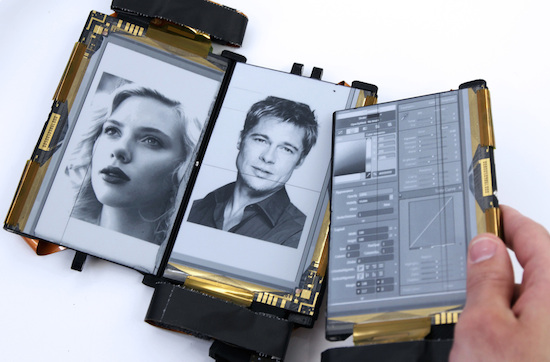

A revolutionary device called "Paperfold", a shape-changing, foldable smartphone, is being developed at the Human Media Lab at Queen's University. Featuring three electrophoretic displays that can be folded and opened up, the Paperfold smartphone offers extra screen space well suited to multitasking. Professor Roel Vertegaal and his student Antonio Gomes from the university's School of Computing will publicly unveil their first prototype at the ACM CHI 2014 conference in Toronto today. As you can see from the pictures above, the three displays can be detached and joined in various ways to give the user the feel of holding a foldout map, a tablet, or even a notebook with a keyboard.

With Paperfold, users can make each display tile act as an independent entity or as part of a single system. The device recognizes its own shape automatically and changes the way it displays graphics to suit the new form factor. Touch and inertial measurement unit (IMU) sensors embedded in each display tile allow users to manipulate content dynamically.
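To make the idea of shape recognition concrete, here is a minimal Python sketch. It is not the Human Media Lab's code: the function names, the angle convention, the 15-degree tolerance, and the view labels are all assumptions made for illustration. It supposes the IMU readings have already been reduced to one fold angle per hinge between adjacent tiles.

```python
# Hypothetical sketch: classify the device shape from hinge angles.
# Assumed convention (not from the source): 0 degrees = flat,
# negative = folded away from the user, positive = folded toward the user.

def classify_shape(hinge_angles, tolerance=15.0):
    """Return a coarse shape label for a list of hinge angles in degrees."""
    if all(abs(angle) <= tolerance for angle in hinge_angles):
        return "flat"
    if all(angle < -tolerance for angle in hinge_angles):
        return "convex"
    if all(angle > tolerance for angle in hinge_angles):
        return "concave"
    return "mixed"


# Assumed mapping from shape to view, loosely following the map and
# globe examples described in the article.
VIEW_FOR_SHAPE = {"flat": "2d_map", "convex": "globe"}


def choose_view(hinge_angles):
    """Pick a view for the current shape, defaulting to the flat map."""
    return VIEW_FOR_SHAPE.get(classify_shape(hinge_angles), "2d_map")


print(choose_view([0.0, 5.0]))
print(choose_view([-40.0, -35.0]))
```

A real device would need filtering and calibration of the sensor data before any thresholding like this; the sketch only shows the final decision step.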

So far, the developers have been able to flatten the three displays to make the smartphone work like a spread-out Google map, and if you fold it into a convex globe, it shows the same map in a Google Earth-like view. That's not all. Users can also fold the Paperfold device into the shape of a 3D building, so that it picks up the Google SketchUp model of that building and turns the device into an architectural model that can be 3D printed.

Current solutions exploring book form factors often involve rigid dual-screen designs that can only orient around one axis, and this is what makes the Paperfold device truly unique. The newly developed C# application interprets changes in orientation, connection and touch input data reported by each individual tile. It also runs the applications displayed on Paperfold, sending segmented images to the display tiles depending on the configuration of the tiles, as well as user input.
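The segmentation step can be pictured with a short Python sketch. The actual application is written in C# and its internals are not public, so everything here is hypothetical: the `segment_image` function, the tile names, and the assumption that connected tiles sit side by side and each receive an equal-width vertical strip of one image.

```python
# Hypothetical sketch: split one image into per-tile strips.
# Assumption (not from the source): tiles are joined left to right and
# the image is a 2D list of pixel values (a list of rows).

def segment_image(image, tile_order):
    """Return a dict mapping each tile id to its vertical strip of the image."""
    if not tile_order:
        return {}
    width = len(image[0]) if image else 0
    strip = width // len(tile_order)
    segments = {}
    for index, tile_id in enumerate(tile_order):
        start = index * strip
        # The last tile absorbs any leftover columns.
        end = width if index == len(tile_order) - 1 else start + strip
        segments[tile_id] = [row[start:end] for row in image]
    return segments


image = [list(range(6)) for _ in range(2)]
for tile_id, strip_pixels in segment_image(image, ["left", "center", "right"]).items():
    print(tile_id, strip_pixels)
```

When a tile is detached or the order changes, calling the function again with the new `tile_order` yields a fresh set of strips, which is the kind of reconfiguration the article describes.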

That's all we know right now. We will keep you updated as soon as we find out more. Do let us know what you think about the Paperfold device in the comments below.

Source: #-Link-Snipped-#
