Human Arm Sensors Make Robots More Intelligent For Manufacturing Plants

Researchers at the Georgia Institute of Technology have developed a new control system that uses sensors worn on the human arm to make robots in manufacturing plants more intelligent. Innovation in robotics is already making manufacturing smarter and safer, and these arm sensors are meant to help train robots to mimic, respond to, and even predict human movements so that person and machine can work together smoothly. “It turns into a constant tug of war between the person and the robot,” explains Billy Gallagher, a recent Georgia Tech Ph.D. graduate in robotics who led the project. “Both react to each other’s forces when working together. The problem is that a person’s muscle stiffness is never constant, and a robot doesn’t always know how to correctly react.”

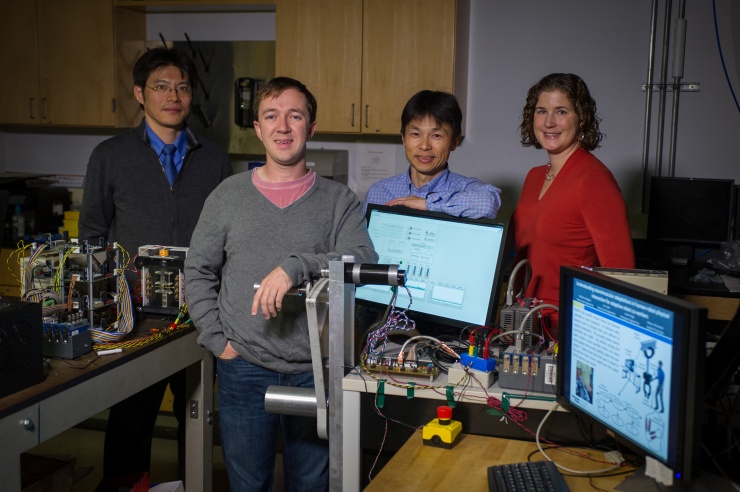

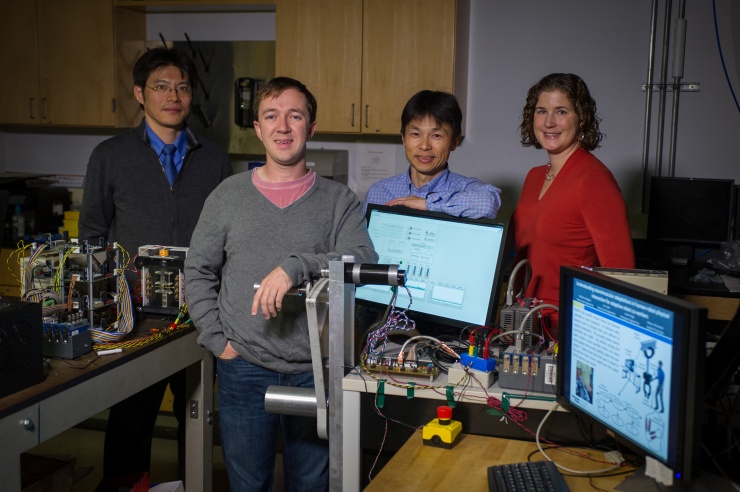

Besides Gallagher, the research team includes Associate Professor Minoru Shinohara (Applied Physiology), Assistant Professor Karen Feigh (Aerospace Engineering) and Professor Emeritus Wayne Book (Mechanical Engineering). According to the team, current robot systems get confused by mixed signals. For example, when human operators push a lever forward or backward, the robot recognizes the command and moves accordingly. But when they want to stop the movement and hold the lever in place, people tend to stiffen and contract the muscles on both sides of their arms, producing a high level of co-contraction. “The robot becomes confused. It doesn’t know whether the force is purely another command that should be amplified or ‘bounced’ force due to muscle co-contraction,” said Jun Ueda, Gallagher’s advisor and a professor in the Woodruff School of Mechanical Engineering. “The robot reacts regardless.”

The robot responds to that bounced force, creating vibration. The operator reacts in turn, stiffening their arm and producing even more force, so the vibrations grow worse. The Georgia Tech system eliminates these vibrations using sensors worn on the operator’s forearm. The sensors send muscle activity to a computer, which tells the robot the operator’s level of muscle contraction. The system then judges the operator’s physical state and adjusts how it interacts with the person accordingly. The result is a robot that moves easily and safely.
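The article does not describe the control law itself. As a purely illustrative sketch, assuming the forearm sensors deliver surface-EMG-style signals from one flexor and one extensor muscle, the Python below shows how a controller might turn muscle activity into a co-contraction estimate and use it to decide how much of the measured hand force to amplify. The index formula, the gain schedule, the admittance value and every function name are hypothetical choices for this example, not the Georgia Tech team's method.

```python
import math


def rms(window):
    """Root-mean-square amplitude of one window of (already filtered) EMG samples."""
    if not window:
        return 0.0
    return math.sqrt(sum(x * x for x in window) / len(window))


def cocontraction_index(flexor_window, extensor_window):
    """Illustrative (hypothetical) co-contraction index in [0, 1].

    Near 0 when only one muscle is active (a clear push or pull),
    exactly 1 when flexor and extensor are equally active (a stiffened arm).
    """
    a = rms(flexor_window)
    b = rms(extensor_window)
    if a + b == 0.0:
        return 0.0  # no muscle activity at all
    return 2.0 * min(a, b) / (a + b)


def amplification_gain(cci, max_gain=5.0, min_gain=1.0):
    """Hypothetical linear gain schedule: amplify hand force less as co-contraction rises."""
    cci = min(max(cci, 0.0), 1.0)  # clamp to the valid range
    return max_gain - (max_gain - min_gain) * cci


def commanded_velocity(hand_force, cci, admittance=0.02):
    """Velocity command (m/s) from hand force (N) via a simple admittance law.

    Force measured while the arm is co-contracted (likely 'bounced' force)
    is amplified less, the idea being not to feed the tug-of-war oscillation.
    """
    return admittance * amplification_gain(cci) * hand_force


if __name__ == "__main__":
    push = cocontraction_index([0.9, -0.9] * 4, [0.1, -0.1] * 4)  # flexor dominates
    hold = cocontraction_index([0.7, -0.7] * 4, [0.7, -0.7] * 4)  # both muscles stiffened
    for label, cci in (("push", push), ("hold", hold)):
        print(label, round(cci, 2), commanded_velocity(10.0, cci))
```

Run as a script, it compares a clear push (flexor dominant, low index, high gain) with holding the lever (both muscles equally active, index of 1, gain reduced to `min_gain`). A linear gain schedule keeps the sketch readable; a real controller would also need signal filtering, per-operator calibration and a stability analysis.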

Where else do you think human arm sensors could be applied in robotics? Share your thoughts with us in the comments below.