Researchers Triumph In Creating A Model That Accurately Emulates The Human Brain

Artificial computation and human thought support each other. Humans built silicon minds to run faster and process more complex algorithms, yet those machines could not have been made in the first place without humans. If we want to coexist with machines in the near future, we need to teach them to reason in ways comparable to our own brains. To that end, a research team from Northwestern University has developed a computational model that performs at human levels on a standard intelligence test.

According to Ken Forbus, Professor of Electrical Engineering and Computer Science at Northwestern’s McCormick School of Engineering, the model performs in the 75th percentile for American adults, which places it above average. The model is built on CogSketch, an AI platform from Forbus’ group. CogSketch is designed to solve visual problems and understand sketches so that it can give immediate, interactive feedback. The platform also incorporates a computational model of analogy based on the structure-mapping theory of Northwestern psychology professor Dedre Gentner.

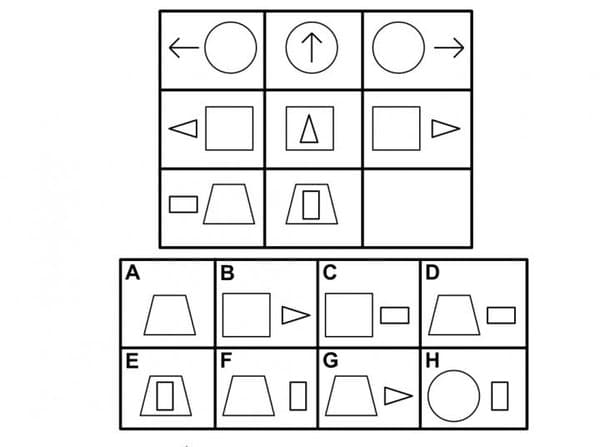

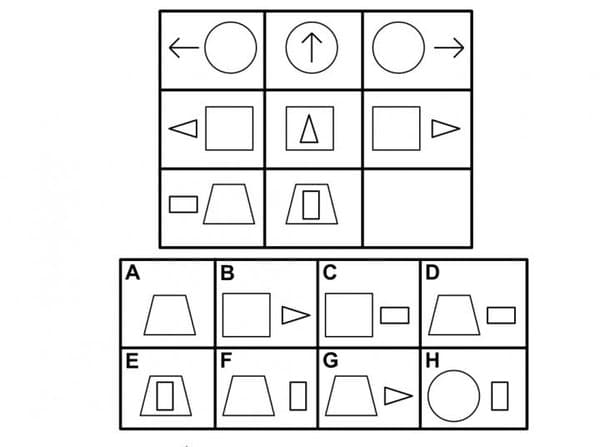

A Set From The Raven's Progressive Matrices Standardized Test

The new computational model not only captures complex aspects of human visual cognition but also narrows the gap between humans and computers. The team originally built the model for general visual problem solving, then extended and reconfigured it to take Raven's Progressive Matrices, a nonverbal standardized test that measures abstract reasoning. Each test item is a matrix with one image missing, and the model had to select the best completion from six to eight choices.

According to Lovett, a researcher at the US Naval Research Laboratory, the Raven’s test is the best predictor of “fluid intelligence”, the ability to think abstractly, reason, identify and solve problems, and discern relationships. The team further suggests that understanding relational representations is essential to modeling human cognition. The system marks a step towards visual reasoning, which, if it follows initial recognition, could advance AI-based systems. The research has been published in the journal Psychological Review.

Source: Making A.I. Systems that See the World as Humans Do | News | Northwestern Engineering
